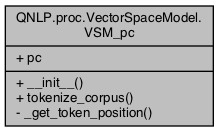

QNLP.proc.VectorSpaceModel.VSM_pc Class Reference

Collaboration diagram for QNLP.proc.VectorSpaceModel.VSM_pc:

Public Member Functions

| def | __init__ (self) |
| def | tokenize_corpus (self, corpus, proc_mode=0, stop_words=True, use_spacy=False) |

Data Fields

| pc |

Private Member Functions

| def | _get_token_position (self, tagged_tokens, token_type) |

Detailed Description

Definition at line 27 of file VectorSpaceModel.py.

Constructor & Destructor Documentation

◆ __init__()

def QNLP.proc.VectorSpaceModel.VSM_pc.__init__ (self)

Definition at line 28 of file VectorSpaceModel.py.

Member Function Documentation

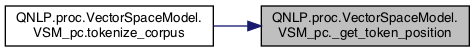

◆ _get_token_position()

def QNLP.proc.VectorSpaceModel.VSM_pc._get_token_position (self, tagged_tokens, token_type) [private]

Tracks the positions at which a tagged element is found in the tokenised corpus list, which is useful for comparing distances. If the key does not yet exist, a list containing the single position is added for it; otherwise, the existing list is extended with the new token position value.
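The bookkeeping described above can be sketched as follows. This is a minimal illustration, not the code from VectorSpaceModel.py: the function name `track_token_positions`, the `(word, tag)` pair layout of `tagged_tokens`, and the matching of tags by prefix are all assumptions made for the example.

```python
def track_token_positions(tagged_tokens, tag_prefixes):
    """Map each matching word to the list of indices where it occurs.

    Assumptions (not confirmed by the source): tagged_tokens is a list of
    (word, tag) pairs, and tag_prefixes is a tuple of tag prefixes such
    as ("NN",) for nouns or ("VB",) for verbs.
    """
    positions = {}
    for index, (word, tag) in enumerate(tagged_tokens):
        if not tag.startswith(tag_prefixes):
            continue  # token is not of the requested type
        if word not in positions:
            # Key does not exist yet: start a list with a single element.
            positions[word] = [index]
        else:
            # Key exists: extend the list with the new position.
            positions[word].extend([index])
    return positions


# Hypothetical input in the assumed (word, tag) layout.
tagged = [("dog", "NN"), ("chases", "VBZ"), ("cat", "NN"), ("dog", "NN")]
noun_positions = track_token_positions(tagged, ("NN",))
verb_positions = track_token_positions(tagged, ("VB",))
```

Keeping every position per word, rather than only a count, is what lets later steps compare where two words occur relative to each other.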

Definition at line 89 of file VectorSpaceModel.py.

Referenced by QNLP.proc.VectorSpaceModel.VSM_pc.tokenize_corpus().

Here is the caller graph for this function:

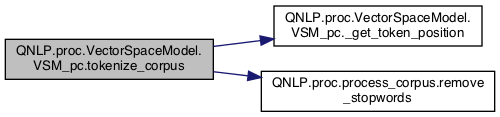

◆ tokenize_corpus()

def QNLP.proc.VectorSpaceModel.VSM_pc.tokenize_corpus (self, corpus, proc_mode = 0, stop_words = True, use_spacy = False)

Rewrite of pc.tokenize_corpus that also tracks the positions of basis words in the token list, to improve later pairwise distance calculations.
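One way the recorded positions could feed a later pairwise distance calculation is sketched below. The helper `min_pairwise_distance` and the choice of the minimum absolute index difference as the metric are hypothetical; the source does not state which distance is used.

```python
from itertools import product


def min_pairwise_distance(positions_a, positions_b):
    """Smallest absolute index gap between any occurrence of two words.

    Both arguments are assumed to be non-empty lists of integer token
    positions, such as those a position-tracking tokenizer might record.
    """
    return min(abs(a - b) for a, b in product(positions_a, positions_b))


# Hypothetical position lists for one noun and one verb.
noun_occurrences = [0, 3]
verb_occurrences = [1]
closest = min_pairwise_distance(noun_occurrences, verb_occurrences)
```

With position lists available up front, a calculation like this does not need to rescan the token list for each word pair.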

Definition at line 31 of file VectorSpaceModel.py.

64 #spacy_pos_tagger = 2000000 #Uses approx 1GB memory for each 100k tokens; assumes large memory pool

87 return {'verbs':count_verbs, 'nouns':count_nouns, 'tk_sentence':token_sents, 'tk_words':token_words}

def remove_stopwords(text, sw): defined at process_corpus.py:19

def tokenize_corpus(corpus, proc_mode=0, stop_words=True): defined at process_corpus.py:25

References QNLP.proc.VectorSpaceModel.VSM_pc._get_token_position(), QNLP.proc.VectorSpaceModel.VSM_pc.pc, and QNLP.proc.process_corpus.remove_stopwords().


Field Documentation

◆ pc

QNLP.proc.VectorSpaceModel.VSM_pc.pc

Definition at line 29 of file VectorSpaceModel.py.

Referenced by QNLP.proc.VectorSpaceModel.VectorSpaceModel.load_tokens(), and QNLP.proc.VectorSpaceModel.VSM_pc.tokenize_corpus().

The documentation for this class was generated from the following file:

- /Users/mlxd/Desktop/intel-qnlp-rc2/modules/py/pkgs/QNLP/proc/VectorSpaceModel.py