Functions

| def | remove_stopwords (text, sw) |

| def | tokenize_corpus (corpus, proc_mode=0, stop_words=True) |

| def | load_corpus (corpus_path, proc_mode=0) |

| def | process (corpus_path, proc_mode=0) |

| def | word_pairing (words, window_size) |

| def | num_qubits (words) |

| def | mapNameToBinaryBasis (words, db_name, table_name="qnlp") |

| def | run (BasisPath, CorpusPath, proc_mode=0, db_name="qnlp_tagged_corpus") |

Variables

| sw | |

Function Documentation

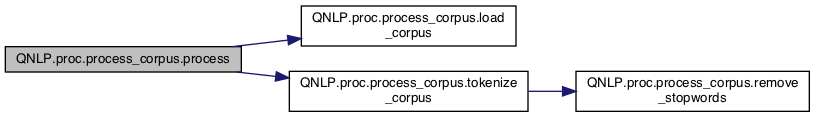

◆ load_corpus()

| def QNLP.proc.process_corpus.load_corpus | ( | corpus_path, proc_mode = 0 | ) |

Load the corpus from disk.

Definition at line 65 of file process_corpus.py.

Referenced by QNLP.proc.process_corpus.process().

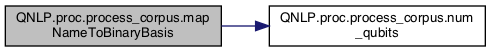

◆ mapNameToBinaryBasis()

| def QNLP.proc.process_corpus.mapNameToBinaryBasis | ( | words, db_name, table_name = "qnlp" | ) |

Maps the unique string in each respective space to a binary basis number for qubit representation.

Keyword arguments:

words -- list of the tokenized words

db_name -- name of the database file

table_name -- name of the table to store data in db_name

Definition at line 135 of file process_corpus.py.

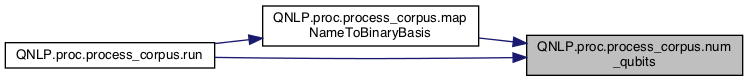

References QNLP.proc.process_corpus.num_qubits().

Referenced by QNLP.proc.process_corpus.run().
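The mapping described for mapNameToBinaryBasis() can be illustrated with a minimal sketch. It is not the module's implementation: the helper name `map_to_binary_basis_sketch` is hypothetical, the alphabetical ordering is an assumption, and the database storage step (`db_name`, `table_name`) is left out entirely.

```python
def map_to_binary_basis_sketch(names):
    """Assign each unique string a fixed-width binary index (illustrative only)."""
    # Assumption: unique names are ordered alphabetically before indexing.
    unique = sorted(set(names))
    # (n - 1).bit_length() equals ceil(log2(n)) for n >= 1; use at least 1 bit.
    width = max(1, (len(unique) - 1).bit_length())
    return {name: format(index, f"0{width}b") for index, name in enumerate(unique)}


nouns = ["cat", "dog", "mat", "cat"]
basis = map_to_binary_basis_sketch(nouns)
print(basis)
```

Each bit string corresponds to one computational basis state of a register that is just wide enough to hold every unique name.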

◆ num_qubits()

| def QNLP.proc.process_corpus.num_qubits | ( | words | ) |

Calculate the required number of qubits to encode the NVN state-space of the corpus. Returns a tuple of the required states and the number of needed qubits. While it is possible to reduce the qubit count by considering the total required number as being the product of each combo, it is easier to deal with separate states for nouns and verbs, with at most 1 extra qubit required.

Keyword arguments:

words -- list of the tokenized words

Definition at line 116 of file process_corpus.py.

Referenced by QNLP.proc.process_corpus.mapNameToBinaryBasis(), and QNLP.proc.process_corpus.run().
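The qubit count described for num_qubits() can be worked through with a small sketch. It assumes that `words` carries separate collections of unique nouns and verbs under the hypothetical keys `"nouns"` and `"verbs"`, and that an NVN state uses two noun registers and one verb register. The function name and the returned tuple layout are illustrative, not the module's own.

```python
def num_qubits_sketch(words):
    """Estimate register sizes for a noun-verb-noun (NVN) encoding (illustrative only)."""
    n_nouns = len(set(words["nouns"]))
    n_verbs = len(set(words["verbs"]))

    # Number of distinct NVN combinations: noun x verb x noun.
    nvn_states = n_nouns * n_verbs * n_nouns

    # (n - 1).bit_length() equals ceil(log2(n)) for n >= 1, without float rounding.
    qubits_noun = (n_nouns - 1).bit_length()
    qubits_verb = (n_verbs - 1).bit_length()

    # Separate registers: two noun registers plus one verb register.
    qubits_separate = 2 * qubits_noun + qubits_verb
    # Alternative: index the product space with a single register.
    qubits_product = (nvn_states - 1).bit_length()
    return nvn_states, qubits_separate, qubits_product


example = {"nouns": ["cat", "dog", "mat", "hat", "rat"], "verbs": ["sat", "ate"]}
states, separate, product = num_qubits_sketch(example)
print(states, separate, product)
```

With five nouns and two verbs there are 50 NVN states. Separate registers need 3 + 1 + 3 = 7 qubits, while a single register over the product space needs 6.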

◆ process()

| def QNLP.proc.process_corpus.process | ( | corpus_path, proc_mode = 0 | ) |

Tokenize the corpus data.

Definition at line 72 of file process_corpus.py.

References QNLP.proc.process_corpus.load_corpus(), and QNLP.proc.process_corpus.tokenize_corpus().

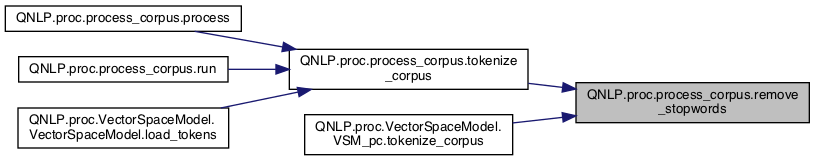

◆ remove_stopwords()

| def QNLP.proc.process_corpus.remove_stopwords | ( | text, sw | ) |

Remove words that do not add to the meaning, which simplifies the sentences.

Definition at line 19 of file process_corpus.py.

Referenced by QNLP.proc.process_corpus.tokenize_corpus(), and QNLP.proc.VectorSpaceModel.VSM_pc.tokenize_corpus().
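A minimal sketch of stop-word filtering follows, assuming `text` is a list of tokens and `sw` is a collection of stop words. The small `EXAMPLE_STOPWORDS` set is a hypothetical stand-in for the module-level `sw`, and the case-insensitive comparison is an assumption rather than documented behaviour.

```python
# Hypothetical stand-in for the module-level `sw` variable.
EXAMPLE_STOPWORDS = {"the", "a", "an", "of", "and", "is"}


def remove_stopwords_sketch(text, sw):
    """Return the tokens of `text` that do not appear in `sw` (illustrative only)."""
    # Assumption: matching ignores case, so "The" is treated like "the".
    stop = {word.lower() for word in sw}
    return [token for token in text if token.lower() not in stop]


tokens = ["The", "cat", "sat", "on", "a", "mat"]
filtered = remove_stopwords_sketch(tokens, EXAMPLE_STOPWORDS)
print(filtered)
```

Token order is preserved, so the filtered list can still be passed to later steps that depend on word position.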

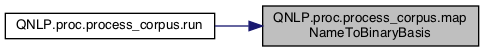

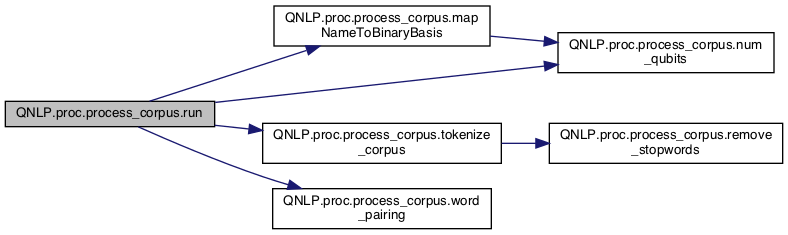

◆ run()

| def QNLP.proc.process_corpus.run | ( | BasisPath, CorpusPath, proc_mode = 0, db_name = "qnlp_tagged_corpus" | ) |

Definition at line 167 of file process_corpus.py.

References QNLP.proc.process_corpus.mapNameToBinaryBasis(), QNLP.proc.process_corpus.num_qubits(), QNLP.proc.process_corpus.tokenize_corpus(), and QNLP.proc.process_corpus.word_pairing().

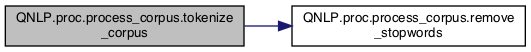

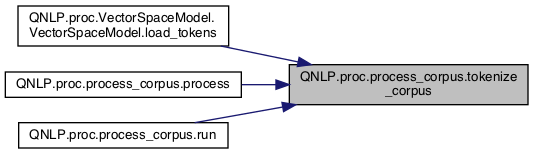

◆ tokenize_corpus()

| def QNLP.proc.process_corpus.tokenize_corpus | ( | corpus, proc_mode = 0, stop_words = True | ) |

Pass the corpus as a string, which is subsequently broken into tokenized sentences and returned as a dictionary of verbs, nouns, tokenized words, and tokenized sentences.

Keyword arguments:

corpus -- string representing the corpus to tokenize

proc_mode -- defines the processing mode. Lemmatization: proc_mode="l"; Stemming: proc_mode="s"; No additional processing: proc_mode=0 (default=0)

stop_words -- indicates whether stop words should be removed (False) or kept (True) (from nltk.corpus.stopwords.words("english"))

Definition at line 25 of file process_corpus.py.

References QNLP.proc.process_corpus.remove_stopwords().

Referenced by QNLP.proc.VectorSpaceModel.VectorSpaceModel.load_tokens(), QNLP.proc.process_corpus.process(), and QNLP.proc.process_corpus.run().

◆ word_pairing()

| def QNLP.proc.process_corpus.word_pairing | ( | words, window_size | ) |

Examine the words around each verb with the specified window size, and attempt to match the NVN pattern. The window_size specifies the number of values around each verb to search for the matching nouns. If passed as tuple (l,r) gives the left and right windows separately. If passed as a scalar, both values are equal.

Keyword arguments:

words -- list of the tokenized words

window_size -- window to search for word pairings. Tuple

Definition at line 79 of file process_corpus.py.

Referenced by QNLP.proc.process_corpus.run().
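The windowed NVN search described for word_pairing() can be sketched as follows. The input format is an assumption: a list of `(token, tag)` pairs with the hypothetical tags `"N"` for nouns and `"V"` for verbs, which may differ from the structure the module actually receives. Choosing the nearest noun on each side is also an illustrative choice.

```python
def word_pairing_sketch(tagged, window_size):
    """Find (noun, verb, noun) triples within a window around each verb (illustrative only)."""
    # A scalar window applies to both sides; a tuple gives (left, right).
    if isinstance(window_size, int):
        left, right = window_size, window_size
    else:
        left, right = window_size

    matches = []
    for i, (word, tag) in enumerate(tagged):
        if tag != "V":
            continue
        # Nouns within `left` positions before the verb.
        before = [w for w, t in tagged[max(0, i - left):i] if t == "N"]
        # Nouns within `right` positions after the verb.
        after = [w for w, t in tagged[i + 1:i + 1 + right] if t == "N"]
        if before and after:
            # Assumption: keep the nearest noun on each side.
            matches.append((before[-1], word, after[0]))
    return matches


tagged = [("john", "N"), ("quickly", "O"), ("eats", "V"), ("the", "O"), ("apple", "N")]
pairs = word_pairing_sketch(tagged, 2)
narrow = word_pairing_sketch(tagged, (1, 2))
print(pairs, narrow)
```

With a window of 2 on both sides the verb "eats" is matched with "john" and "apple". Narrowing the left window to 1 excludes "john", so no NVN triple is found.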

Variable Documentation

◆ sw

| QNLP.proc.process_corpus.sw |

Definition at line 15 of file process_corpus.py.